🔗 Again on 0-based vs. 1-based indexing

André Garzia recently wrote a nice blog post called “Lua, a misunderstood language”, and unfortunately (but perhaps unsurprisingly) the bulk of the HN comments on it were about the age-old 0-based vs. 1-based indexing debate. You see, Lua uses 1-based indexing, and lots of programmers claimed this is unnatural because “every other language out there” uses 0-based indexing.

I’ll quickly brush aside the fact that this is not true (1-based indexing has a long history, running through Fortran, COBOL, Pascal, Ada, Smalltalk, etc.) and I’ll grant that the vast majority of popular languages in the industry nowadays are 0-based. So, let’s avoid the popularity contest and address the claim that 0-based indexing is “inherently better”, or worse, “more natural”.

It really shows how conditioned an entire community can be when they find the statement “given a list x, the first item in x is x[1], the second item in x is x[2]” to be unnatural. :) In fact, it is a somewhat scary thought about groupthink even outside of programming!

I guess I shouldn’t be surprised by groupthink coming from HN, but it was also alarming how a bunch of the HN comments gave nearly identical responses, all linking to the same note by Dijkstra defending 0-based indexing as inherently better, as an implicit Appeal to Authority. (Well, I did read Dijkstra’s note years ago and wasn’t particularly convinced by it, and it’s not the first time I disagree with Dijkstra, by the way. But if we’re giving it extra weight for coming from one of our field’s legends, then the list of 1-based languages above gives me a much longer list of legends who disagree, not to mention standard mathematical notation, which is rooted in a much older tradition.)

I think a better approach than trying to defend 1-based indexing is to try to answer the question “why is 0-based indexing even a thing in programming languages?” Of course, nowadays the number one reason is tradition and familiarity from other popular languages, and I think even proponents of 0-based indexing would agree, in spite of the fact that most of them wouldn’t even notice that they don’t call it a number zero reason. But if the main reason for something is tradition, then it’s important to know how the tradition started. It wasn’t with Dijkstra.

C is often pointed to as the popularizer of this style. C’s well-known history points to BCPL by Martin Richards as its predecessor, a language designed to be simple to write a compiler for. One of the simplifications carried over to C: array indexing and pointer offsets were mashed together.

It’s telling how, whenever people go into non-Appeal-to-Authority arguments to defend 0-based indexes (including Dijkstra himself), they start talking about offsets. That’s because offsets are naturally 0-based, being a relative measurement: here + 0 = here; here + 1 meter = 1 meter away from here, and so on. Numeric indexes, on the other hand, are identifiers for elements of an ordered object, and thus use the 1-based ordinal numbers: the first card in the deck, the second in the deck, etc.

BCPL, back in 1967, took a shortcut and made it so that p[i] (an index) was equal to p + i (an offset). C inherited that. And nowadays, all arguments that say that indexes should be 0-based are actually arguments that offsets are 0-based, indexes are offsets, therefore indexes should be 0-based. That’s a circular argument. Even Dijkstra’s argument starts with the calculation of differences, i.e., doing “pointer arithmetic” (offsets), not indexing.
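To make the index/offset distinction concrete, here is a minimal sketch in Lua, the language that started this discussion. The `at_offset` helper is hypothetical and exists only for illustration: indexing names an element by its ordinal position, while an offset is a distance measured from some other position.

```lua
local deck = { "ace", "king", "queen" }

-- Indexing: name an element by its ordinal position (first, second, ...).
local first = deck[1]
local last = deck[#deck]

-- Offsetting: a distance relative to a starting position.
-- `at_offset` is a hypothetical helper, not a standard Lua function.
local function at_offset(list, base, offset)
   return list[base + offset]
end

-- An offset of 0 from the first card is the first card itself.
assert(at_offset(deck, 1, 0) == first)
-- One step past the second card is the third (and last) card.
assert(at_offset(deck, 2, 1) == last)
```

Keeping the two operations separate means neither has to borrow the other's numbering.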

Nowadays, people just repeat these arguments over and over, because “C won”, and now that tiny compiler-writing shortcut from the 1960s appears in Java, C#, Python, Perl, PHP, JavaScript and so on, even though pointer arithmetic is not part of everyday code in any of these languages (C# allows it only in unsafe code).

What’s funny to think about is that if instead C had not done that and used 1-based indexing, people today would certainly be claiming how C is superior for providing both 1-based indexing with p[i] and 0-based pointer offsets with p + i. I can easily visualize how people would argue that was the best design because there are always scenarios where one leads to more natural expressions than the other (similar to having both x++ and ++x), and how newcomers getting them mixed up were clearly not suited for the subtleties of low-level programming in C, and should be instead using simpler languages with garbage collection and without 0-based pointer arithmetic.

🔗 What’s faster? Lexing Teal with Lua 5.4 or LuaJIT, by hand or with lpeg

I ran a small experiment where I ported my handwritten lexer written in Teal (which translates to Lua) to lpeg, then ran the compiler on the largest Teal source I have (itself).

| Configuration | Lexer time | Total time |
|---|---|---|
| LuaJIT+hw | 36 ms | 291 ms |
| LuaJIT+lpeg | 40 ms | 325 ms |
| Lua5.4+hw | 105 ms | 338 ms |
| Lua5.4+lpeg | 66 ms | 285 ms |

These are average times from multiple runs of tl check tl.tl, done with hyperfine.

The “lexer time” was measured by adding an os.exit(0) call right after the lexer pass. The “total time” is the full run, which includes additional lexer passes on some tiny code snippets that are generated on the fly, so the change between the lpeg lexer and the handwritten lexer affects it a bit as well.

Looks like on LuaJIT my handwritten lexer beats lpeg, but the rest of the compiler (lots of AST manipulation and recursive walks) seems to run faster on Lua 5.4.2 than it does on LuaJIT 2.1-git.

Then I decided to move the os.exit(0) further, to after the parser step but before the type checking and code generation steps. These are the results:

| Configuration | Lexer+parser time |
|---|---|
| LuaJIT+hw | 107 ms ± 20 ms |
| LuaJIT+lpeg | 107 ms ± 21 ms |
| Lua5.4+hw | 163 ms ± 3 ms |
| Lua5.4+lpeg | 120 ms ± 2 ms |

The Lua 5.4 numbers I got seem consistent with the lexer-only tests: the same 40 ms gain was observed when switching to lpeg, which tells me the parsing step is taking about 55 ms with the handwritten parser. With LuaJIT, the handwritten parser seems to take about 65 ms. What was interesting, though, was the variance reported by hyperfine: the Lua 5.4 tests gave me a variation on the order of 2-3 ms, while LuaJIT was around 20 ms.
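The subtraction behind these estimates can be spelled out in a few lines of Lua. This is only a rough sketch: it subtracts one average from another, using the figures reported above, and ignores the run-to-run spread, so the LuaJIT results in particular should be read with the ±20 ms noise in mind.

```lua
-- Average times in milliseconds, copied from the measurements above.
local lexer_ms = {
   ["LuaJIT+hw"] = 36, ["LuaJIT+lpeg"] = 40,
   ["Lua5.4+hw"] = 105, ["Lua5.4+lpeg"] = 66,
}
local lexer_parser_ms = {
   ["LuaJIT+hw"] = 107, ["LuaJIT+lpeg"] = 107,
   ["Lua5.4+hw"] = 163, ["Lua5.4+lpeg"] = 120,
}

local order = { "LuaJIT+hw", "LuaJIT+lpeg", "Lua5.4+hw", "Lua5.4+lpeg" }

-- Parser-only estimate: (lexer + parser) minus (lexer alone).
local parser_ms = {}
for _, name in ipairs(order) do
   parser_ms[name] = lexer_parser_ms[name] - lexer_ms[name]
   print(string.format("%-12s parser ~ %d ms", name, parser_ms[name]))
end

-- Gain from switching the lexer to lpeg on Lua 5.4, measured both ways.
local gain_lexer_only = lexer_ms["Lua5.4+hw"] - lexer_ms["Lua5.4+lpeg"]
local gain_with_parser = lexer_parser_ms["Lua5.4+hw"] - lexer_parser_ms["Lua5.4+lpeg"]
print(string.format("lpeg gain on Lua 5.4: %d ms / %d ms", gain_lexer_only, gain_with_parser))
```

On Lua 5.4 the two ways of measuring the lpeg gain agree to within a few milliseconds, which is what makes the parser estimate there believable; the LuaJIT estimates land within the noise of the figure quoted in the text.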

I re-ran the “total time” tests running the full tl check tl.tl to observe the variance, and it was again consistent, with LuaJIT’s performance jitter (no pun intended!) being 6-8x that of Lua 5.4:

| Configuration | Total time |
|---|---|
| LuaJIT+hw | 299 ms ± 25 ms |
| LuaJIT+lpeg | 333 ms ± 31 ms |
| Lua5.4+hw | 336 ms ± 4 ms |
| Lua5.4+lpeg | 285 ms ± 4 ms |

My conclusion from this experiment is that converting the Teal parser from the handwritten one into lpeg would probably increase performance in Lua 5.4 and decrease the performance variance in LuaJIT (with the bulk of that processing happening in the more performance-reliable lpeg VM — in fact, the variance numbers of the “lexer tests” in lpeg mode are consistent between LuaJIT and Lua 5.4). It’s unclear to me whether an lpeg-based parser will actually run faster or slower than the handwritten one under LuaJIT — possibly the gain or loss will be below the 8-9% margin of variance we observe in LuaJIT benchmarks, so it’s hard to pay much attention to any variability below that range.
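For reference, the 8-9% margin can be reproduced from the “total time” figures by dividing each reported spread by its mean. The sketch below assumes that the ± values printed by hyperfine can be read as a simple spread around the average, which is my reading rather than something stated in the measurements.

```lua
-- "Total time" results: mean and reported spread, in milliseconds.
local total = {
   { name = "LuaJIT+hw",   mean = 299, spread = 25 },
   { name = "LuaJIT+lpeg", mean = 333, spread = 31 },
   { name = "Lua5.4+hw",   mean = 336, spread = 4 },
   { name = "Lua5.4+lpeg", mean = 285, spread = 4 },
}

-- Relative spread as a percentage of the mean.
for _, row in ipairs(total) do
   row.relative = row.spread / row.mean * 100
   print(string.format("%-12s +/- %.1f%%", row.name, row.relative))
end
```

Any difference between two LuaJIT configurations that is smaller than these percentages cannot be told apart from noise with this setup.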

Right now, performance of the Teal compiler is not an immediate concern, but I wanted to get a feel for what’s within close reach. The Lua 5.4 + lpeg combo looks promising and the LuaJIT pure-Lua performance is great as usual. For now I’ll keep using the handwritten lexer and parser, if only to avoid a C-based dependency in the core compiler: it’s nice to be able to take the generated tl.lua from the GitHub repo, drop it into any Lua project, call tl.loader() to register the package loader and get instant Teal support in your require() calls!
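In practice, the drop-in setup looks roughly like the sketch below. It assumes tl.lua has been copied somewhere on package.path; the pcall guard is only there so the snippet degrades gracefully when the file is missing, and `mymodule` is a hypothetical Teal module name.

```lua
-- Try to load the Teal compiler; `has_tl` is false if tl.lua is not on package.path.
local has_tl, tl = pcall(require, "tl")

if has_tl then
   -- Register Teal's package loader so that require() can also find .tl files.
   tl.loader()

   -- From here on, a Teal source such as mymodule.tl (hypothetical) could be
   -- loaded like any Lua module:
   -- local mymodule = require("mymodule")
else
   print("tl.lua not found; falling back to plain Lua modules")
end
```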

Update: Turns out a much faster alternative is to use LuaJIT with the JIT disabled! Here are some rough numbers:

| Configuration | Time |
|---|---|
| JIT + lpeg | 327 ms |
| 5.4 | 310 ms |
| JIT | 277 ms |
| 5.4 + lpeg | 274 ms |
| JIT w/ jit.off | 173 ms |
| JIT w/ jit.off + lpeg | 157 ms |

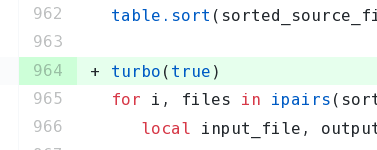

I have now implemented a function which disables the JIT on LuaJIT and temporarily disables garbage collection (which provides further speed ups) and merged it into Teal. The function was appropriately named:
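For readers who want to try the same trick in their own programs, here is a minimal sketch of what such a helper might look like. It is not Teal's actual code: the names `set_fast_mode` and `with_fast_mode` are placeholders of mine, and the only real APIs used are LuaJIT's jit.off()/jit.on() and the standard collectgarbage("stop")/collectgarbage("restart").

```lua
-- Hypothetical helper (not Teal's real function): trade memory for speed
-- by turning off the JIT compiler and pausing the garbage collector.
local function set_fast_mode(on)
   if on then
      -- The `jit` global exists only under LuaJIT; it is nil on PUC-Rio Lua.
      if jit then jit.off() end
      collectgarbage("stop")
   else
      if jit then jit.on() end
      collectgarbage("restart")
   end
end

-- Run `fn` in fast mode and always restore normal mode afterwards.
-- For simplicity, only the first return value of `fn` is passed through.
local function with_fast_mode(fn, ...)
   set_fast_mode(true)
   local ok, result = pcall(fn, ...)
   set_fast_mode(false)
   if not ok then
      error(result, 0)
   end
   return result
end

-- Usage: wrap a short-lived, allocation-heavy task.
local sum = with_fast_mode(function(n)
   local acc = 0
   for i = 1, n do acc = acc + i end
   return acc
end, 100)
print(sum)
```

Pausing the collector is only reasonable for short-lived batch work such as a single compiler run, since memory is not reclaimed until it is restarted.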
