🔗 Data Oriented Design, a.k.a. Lower Level Programming?

I’m not sure if this title is clickbaity, but it certainly summarizes some of the impressions I wanted to write about.

Yesterday I watched Andrew Kelley’s fun talk on Practical Data Oriented Design — do check it out! — and this post will contain some “spoilers” (as in, I will discuss his takeaways). I was drawn to the talk for two reasons: first, because I wanted to check if I was up-to-date on my programming TLAs, but also because he starts by talking about how he felt he had been stuck in a plateau as a programmer for the past decade — a feeling I’m sure many of us have felt at times! — and how this new knowledge got him out of it.

The bulk of the talk, and of his takeaways from refactoring the Zig compiler to use Data Oriented Design, is about getting better runtime performance by making data structures smaller, so they are easier on the cache.

DOD techniques

Lots of the examples involved understanding struct alignment, to raise awareness of how much space gets wasted if you don’t take it into account. One way to deal with it is replacing 64-bit pointers with 32-bit array indices. He points out the trade-offs: we can then have at most 4G items (which is often a fair assumption) and, most importantly, type safety is lost once you no longer have a `MyStruct*` but just a `u32`. This comes along with moving from arrays of structures to structures of arrays, so you can pack data more tightly.
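To make the alignment point concrete, here is a minimal sketch using Python's `ctypes`, which lays out structures following the platform's C rules. The three structures and their field names are hypothetical, not taken from the talk or from the Zig compiler, and the exact sizes depend on the platform ABI.

```python
import ctypes


class Padded(ctypes.Structure):
    # A 1-byte field on each side of an 8-byte field forces padding twice.
    _fields_ = [
        ("is_alive", ctypes.c_uint8),
        ("target", ctypes.c_uint64),  # stand-in for a 64-bit pointer
        ("kind", ctypes.c_uint8),
    ]


class Reordered(ctypes.Structure):
    # Same fields, largest first: the small fields share one padded tail.
    _fields_ = [
        ("target", ctypes.c_uint64),
        ("is_alive", ctypes.c_uint8),
        ("kind", ctypes.c_uint8),
    ]


class Indexed(ctypes.Structure):
    # The 64-bit "pointer" becomes a 32-bit index into some array.
    _fields_ = [
        ("target", ctypes.c_uint32),
        ("is_alive", ctypes.c_uint8),
        ("kind", ctypes.c_uint8),
    ]


sizes = {cls.__name__: ctypes.sizeof(cls) for cls in (Padded, Reordered, Indexed)}
print(sizes)
```

On a typical 64-bit desktop platform I would expect this to report 24, 16 and 8 bytes respectively, but the only thing the sketch relies on is that each step is no larger than the previous one.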

Another method is to apply “encodings” of data to avoid additional booleans in structs. Instead of an `enum Creature { Elf, Orc }` and a boolean `isAlive`, you use an `enum Creature { AliveElf, DeadElf, AliveOrc, DeadOrc }`, effectively moving that bit of data into the byte used by the enum. This is no different from packing structures using bitfields. Combining this with the switch to arrays, you can possibly even avoid using that bit altogether, by keeping two arrays, `dead_creatures` and `living_creatures`.
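A rough sketch of both variants, in Python for brevity. The enum members mirror the ones mentioned above; the `Species` enum and the `is_alive` and `kill` helpers are hypothetical names added for illustration.

```python
from enum import IntEnum


class Species(IntEnum):
    ELF = 0
    ORC = 1


class Creature(IntEnum):
    # The "alive" bit is folded into the enum value itself.
    ALIVE_ELF = 0
    DEAD_ELF = 1
    ALIVE_ORC = 2
    DEAD_ORC = 3


def is_alive(creature: Creature) -> bool:
    return creature in (Creature.ALIVE_ELF, Creature.ALIVE_ORC)


# Variant 1: one byte per creature, no separate boolean field.
creatures = bytearray([Creature.ALIVE_ELF, Creature.DEAD_ORC, Creature.ALIVE_ORC])
alive_count = sum(is_alive(Creature(value)) for value in creatures)

# Variant 2: the array a creature lives in tells us whether it is alive,
# so each entry only needs to store the species.
living_creatures = bytearray([Species.ELF, Species.ORC])
dead_creatures = bytearray([Species.ORC])


def kill(index: int) -> None:
    """Move a creature from the living array to the dead array."""
    dead_creatures.append(living_creatures.pop(index))
```

Note that in the second variant a creature's position changes when it dies, so anything that refers to creatures by index has to account for that. This is the kind of assumption that gets baked into the client code.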

As he went through the various examples of refactors to reach this goal, one by one I kept getting this sense of deja vu: “hey, this is how we used to program in the olden days!”

8-bit coding

If you look at how assembly works for the 6502, the 8-bit processor used in the NES (my first game console) and the Apple II (my first computer!), you’ll see some of those tricks embedded in the processor design itself.

The 6502 is an 8-bit processor with a 16-bit address space: each instruction features a 1-byte opcode optionally followed by up to two bytes. Since the address space is 16 bits, addresses can go from 0 ($0000) to 65535 ($FFFF). So, to load a byte from memory position $1234 into the A register, you do a `LDA $1234`, which takes three bytes: `AD 34 12` (yes, the 6502 is little-endian!). However, to allow for more compact code, the first 256 bytes of memory have special processor support: addresses $0000 to $00FF, the “Zero Page”. So, just like in the enum trick for `AliveElf` and `DeadElf`, the “enum of opcodes” in the 6502 processor uses a separate number for loading from the Zero Page, so `LDA $0012` encodes into two bytes only: `A5 12`. This also reminds me of switching from pointers to integers, since that one-byte offset into the Zero Page is also a half-sized index that can be used given a set of assumptions.
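The encoding choice can be sketched as a tiny function that picks between the two `LDA` opcodes discussed above. This is only an illustration of those two addressing modes, not a real assembler, and `assemble_lda` is a hypothetical name.

```python
LDA_ZERO_PAGE = 0xA5  # opcode followed by a 1-byte address
LDA_ABSOLUTE = 0xAD   # opcode followed by a 2-byte little-endian address


def assemble_lda(address: int) -> bytes:
    """Encode LDA for a memory address, using the Zero Page form when possible."""
    if not 0 <= address <= 0xFFFF:
        raise ValueError("address does not fit in 16 bits")
    if address <= 0xFF:
        return bytes([LDA_ZERO_PAGE, address])
    low, high = address & 0xFF, address >> 8
    return bytes([LDA_ABSOLUTE, low, high])


print(assemble_lda(0x1234).hex())  # ad3412
print(assemble_lda(0x0012).hex())  # a512
```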

Going from arrays of structs to structs of arrays is also a very old trick. In fact, I recall my earliest days of BASIC programming, where we didn’t have structs and only had arrays, so storing each “attribute” in its own array was essentially the only way. If I wanted to store x/y coordinates and a name for a bunch of characters, I’d have three arrays: `XS`, `YS` and `NS$`. I also remember how, over time, using parallel arrays like this started to get frowned upon as “poor technique”, since code using arrays of structs is arguably easier to read and maintain than code using structs of arrays, where you need to manually keep more things in sync.
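The maintenance difference between the two layouts shows up even in a toy example. In this sketch, the lists `xs`, `ys` and `ns` stand in for the BASIC arrays `XS`, `YS` and `NS$`; the `Character` class and the two `remove_*` helpers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Character:
    x: int
    y: int
    name: str


# Array of structs: each element carries all of its attributes.
characters = [Character(1, 2, "HERO"), Character(5, 8, "OGRE")]

# Struct of arrays (parallel arrays): one array per attribute.
xs = [1, 5]
ys = [2, 8]
ns = ["HERO", "OGRE"]


def remove_aos(i: int) -> None:
    del characters[i]


def remove_soa(i: int) -> None:
    # Every parallel array must be updated, or they fall out of sync.
    del xs[i]
    del ys[i]
    del ns[i]
```

Forgetting one of the three `del` statements in `remove_soa` would silently attach the wrong name to the wrong coordinates, which is exactly the kind of manual bookkeeping that made parallel arrays unpopular.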

Refactoring for performance

And this is a common theme: all those old-school techniques being reframed in the talk as Data Oriented Design were in fact one day the norm, and they started to be phased out in the name of ease of development and maintenance. Yes, they do result in faster code (sometimes much faster code!) if you restructure your code to count each byte and optimize for cache usage. But a key word there is restructure. Writing code this way makes sense when you know how the data is being used, and how it will continue to be used. I was happy to see Andrew doing real-world measurements in his talk, and he correctly points out the assumptions involved, with comments such as “if we assume that most monsters are alive”, etc.

It’s very difficult to do this from the get-go, as you’re still iterating around your problem space. But once you know the typical behavior of the program, you can rework the data to match it. And yes, that will most likely give you a performance boost, but most often not without a cost in maintainability: how does that change in the structure change the client code that uses it?

Further, how hard would it be to change it over again if the underlying assumptions change? For example, if the usage patterns change, if we port it over and the architecture changes, or if we need to add another bit of data into that structure. Sometimes those are important concerns, for example in a codebase of projects that change often and fast (think a startup evolving its product as market targets move), but sometimes projects reach a stage of maturity where you can step back, look at it and say: “Well, I think I have a good understanding of how this behaves now. What is the most memory-efficient representation for the data?”

Andrew’s case looks like a prime example of that. Once you get the tokenizer for a compiler done, you don’t really expect big seismic changes to its codebase (in fact, I think I could benefit from making some similar changes to my own Teal compiler!). A compiler is a perfect project for this kind of technique: it’s fairly low-level and performance-critical code. If I recall correctly, Andrew used to work for a web company before Zig, so it makes sense that the style of code he gravitated towards before was higher-level than the one he’s excited about now.

What about maintenance

Optimizing code for performance always feels like a fun puzzle, but the maintenance cost is always in the back of my mind. Even in something like a compiler, making the code “as tight as possible” can backfire, if your implementation language does not allow for proper abstractions. The difficulties in adapting LuaJIT’s C codebase to the changes in newer versions of the Lua language come to mind. One such low-level trick in that codebase hinged on the fact that 32-bit address spaces were limited to 4GB, which allowed for some neat packing of data; that assumption, which was perfectly fair in the early 2000s, became central to the implementation. Of course, 64-bit systems arrived and assumptions changed. Getting rid of that limitation in a codebase full of smart data packing turned out to be a multi-year process.

Of course, if you can get a memory-efficient representation without hitting a maintenance cost, that’s the ideal situation. Some languages are better for this than others. I was impressed that Zig implements structs-of-arrays as MultiArrayList using apparently the same client interface as a regular ArrayList, such that changing from one to the other seems to be a “5-character change”. If you think of other languages that offer no such abstraction, that’s a much more impactful change throughout a codebase (think of all the places where you’d have to change a `monsters[i]->health` into `monster_healths[i]`, and how the memory management of those arrays and their contents change). I’ve also seen Edward Kmett pull some very cool tricks in Haskell combining super-efficient internal representations with very clean high-level abstractions.

In conclusion…

Still, I think it’s nice that some “old-school” techniques are getting a fresh coat of paint and are being revisited. We all benefit from being more performance conscious, and thinking about it also means thinking about when to do it.

There’s something to be said about bringing back “old-school” techniques for programming, though, especially for those of us old enough to remember them: the trade-offs for modern architectures are definitely different. Andrew raises a good point about memoization vs. recomputation: the kinds of things you should choose to memoize when coding for the 6502 processor on an NES are very different from those for a modern x86-64. So it’s actually good that those things are being rethought rather than just rehashed; there’s too much outdated advice out there, especially regarding performance.

The one piece of advice regarding performance that never gets old is: measure. And keep measuring, to see if the tricks you’re keen on using still make sense as the years go by! Another conclusion we get from this is that optimization and abstractions are not at odds with each other. In fact, combining them, across language and application levels, is the right way to do it, so that we can keep both the performance and the high-level code. But that’s probably a subject for another time!

🔗 The algorithm did it!

Earlier today, statistician Kareem Carr posted this interesting tweet, about what people out there mean when they say “algorithm”, which I found to be a good summary:

When people say “algorithms”, they mean at least four different things:

1. the assumptions and description of the model

2. the process of fitting the model to the data

3. the software that implements fitting the model to the data

4. The output of running that software

Unsurprisingly, this elicited a lot of responses from computer scientists, raising the point that this is not what the word algorithm is supposed to mean (you know, a well-defined sequence of steps transforming inputs into outputs, the usual CS definition), including a response from Grady Booch, a key figure in the history of software engineering.

I could see where both of them were coming from. I responded that Carr’s original tweet was not about what programmers mean when we say “algorithms” but about what laypeople mean when they say it or read it in the media. And understanding this distinction is especially important because variations of “the algorithm did it!” are the new favorite excuse of policymakers in companies and governments alike.

Booch responded to me, clarifying that his point is that “even most laypeople don’t think any of those things”, which I agree with. People have a fuzzy definition of what an algorithm is, at best, and I think Carr’s list encompasses rather well the various things that are responsible for the effects that people attribute to a vague notion of “algorithm” when they use that term.

Booch also added that “it’s appropriate to establish and socialize the correct meaning of words”, which simultaneously extends the discussion to a wider scope and also focuses it to the heart of the matter about the use of “algorithm” in our current society.

You see, it’s not about holding on to the original meaning of a word. I’m sure a few responses to Carr were of the pedantic variety, “that’s not what the dictionary says!” kind of thing. But that’s short-sighted, taking a prescriptivist rather than descriptivist view of language. Most of us who care about language are past that debate now, and those of us who adhere to the sociolinguistic view of language even celebrate the fact language shifts, adapts and evolves to suit the use of its speakers.

Shriram Krishnamurthi, CS professor at Brown, joined in on the conversation, observing that this shift in the language is a fait accompli:

I’ve been told by a public figure in France (who is herself a world-class computer scientist) — who is sometimes called upon by shows, government, etc. — that those people DO very much use the word this way. As an algorithms researcher it irks her, but that’s how it is.

Basically, we’ve lost control of the word “algorithm”. It has its narrow meaning but it also has a very broad meaning for which we might instead use “software”, “system”, “model”, etc.

Still, I agreed with Booch that this is a fight worth fighting. Not to preserve our cherished technical meaning of the term, to the dismay of the pedants among our ranks, but because of the very circumstances that led to this linguistic shift.

The use of “algorithm” as a vague term to mean “computers deciding things” has a clear political intent: shifting blame. Social networks boosting hate speech? Sorry, the recommendation algorithm did it. Racist bias in criminal systems? Sorry, it was the algorithm.

When you think about it, from a linguistic point of view, it is as nonsensical as saying that “my hammer assembled the shelf in my living room”. No, I did, using the hammer. Yet, people are trained to use such constructs all the time: “the pedestrian was hit by a car”. Note the use of passive voice to shift the focus away from the active subject: “a car hit a pedestrian” has a different ring to it, and, while still giving agency to a lifeless object, is one step closer to making you realize that it was the driver who hit the pedestrian, using the car, just like it was I who built the shelf, using the hammer.

This of course leads to the “guns don’t kill people, people kill people” response. Yes, it does, and the exact same questions regarding guns also apply regarding “algorithms” — and here I use the term in the “broader” sense as put forward by Carr and observed by Krishnamurthi. Those “algorithms” — those models, systems, collections of data, programs manipulating this data — wield immense power in our society, even, like guns, resulting in violence, and like guns, deserving scrutiny. And when those in possession of those “algorithms” go under scrutiny, they really don’t like it. One only needs to look at the fallout resulting from the work by Bender, Gebru, McMillan-Major and Mitchell, about the dangers of extremely large language models in machine learning. Some people don’t like hearing the suggestion that maybe overpowered weapons are not a good idea.

By hiding all those issues behind the word “algorithm”, policymakers will always find a friendly computer scientist available to say that yes, an algorithm is a neutral thing, after all, it’s just a sequence of instructions, and they will no doubt profit from this confusion of meanings. And I must clarify that by policymakers I mean those in both the public and private spheres, since policies put forward by the private tech giants on their platforms, where we spend so much of our lives, are as impactful on our society as public policies nowadays.

So what do we do? I don’t think it is productive to start well-actually-ing anyone who uses “algorithm” in the broader sense, with a pedantic “Let me interject for a moment — what you mean by algorithm is in reality a…”. But it is productive to spot when this broad term is being used to hide something else. “The algorithm is biased” — What do you mean, the outputs are biased? Why, is the input data biased? The people manipulating that data created a biased process? Who are they? Why did they choose this process and not another? These are better interjections to make.

These broad systems described by Carr above ultimately run on code. There are algorithms inside them, processing those inputs, generating those outputs. The use of “algorithm” to describe the whole may have started as a harmless metonymy (like when saying “White House” to refer to the entire US government), but it has since been proven very useful as a deflection tactic. By using a word that people don’t understand, the message is “computers doing something you don’t understand and shouldn’t worry about”, using “algorithm” handwavily to drift people’s minds away from the policy issues around computation, the same way “cloud” is used with data: “your data? don’t worry, it’s in the cloud”.

Carr is right: his list covers the things that people refer to as “algorithms” nowadays. Krishnamurthi is right: this broad meaning is a reality in modern language. And Booch is right when he says that “words matter; facts matter”.

Holding words to their stricter meanings merely due to our love for the language-as-we-were-taught is a fool’s errand; language changes whether we want it or not. But our duty as technologists is to identify the interplay of the language, our field, and society, how and why they are being used (both the language and our field!). We need to clarify to people what the pieces at play really are when they say “algorithm”. We need to constantly emphasize to the public that there’s no magic behind the curtain, and, most importantly, that all policies are due to human choices.

🔗 Compiler versus Transpiler: what is a compiler, anyway?

Teal was featured on HN today, and one of the comments was questioning the fact that the documentation states that it “compiles Teal into Lua”:

We need better and more rigorous terms in computing science. This use of the compiler word blurs the meaning of interpreted vs compiled languages.

I was under the assumption that it would generate executable machine code, not Lua source code.

I thought that was worth replying to because it allowed me to dispel two misconceptions at once.

First, if we want to be rigorous about computer science terms, calling it “interpreted vs compiled languages” is a misnomer, because being interpreted or compiled is not a property of the language, but of the implementation. There have been things such as a C interpreter and an ahead-of-time compiler for PHP which generates machine code.

But then, we get to the main course, the use of “compiler”.

The definition of compiler has never assumed generating executable machine code. Already in the 1970s, Pascal compilers generated P-code (a form of “bytecode” in Java parlance), which was then interpreted. In the 1980s, Turbo Pascal produced machine code directly.

I’ve seen the neologism “transpiler” being very frowned upon by the academic programming language community precisely because a compiler is a compiler, no matter the output language — my use of “compiler” there was precisely because of my academic background.

I remember people on Academic PL Twitter jokingly calling it the “t-word”, even. I just did a quick Twitter search to see if I could find it, and I found a bunch of references dating from 2014 (though I won’t go linking people’s tweets here). But that shows how out-of-date this blog post is! Academia has pretty much settled on not using the “compiler” vs. “transpiler” distinction at all by now.

I don’t mind the term “transpiler” myself if it helps non-academics understand it’s a source-to-source compiler, but then, you don’t see people calling the Nim compiler, which generates C code then compiles it into machine code, a “transpiler”, even though it is a source-to-source compiler.

In the end, “compiler” is the all-encompassing term for a program that takes code in one language and produces code in another, be it high-level or machine language. And yes, that means that pedantically an assembler is a compiler as well (but we don’t want to be pedantic, right? RIGHT?). And since we’re talking assembler, most C compilers do not generate executable machine code either: gcc produces assembly, which is then turned into machine code by gas. So gcc is a source-to-source compiler? Is Turbo Pascal more of a compiler than gcc? I could just as well add an output step in the Teal compiler to produce an executable in the output using the same techniques of the Pascal compilers of the 70s. I don’t think that would make it more or less of a compiler.
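To illustrate how little the output language changes the nature of the program, here is a toy sketch with one front end and two back ends: one emits Lua source text, the other emits instructions for a made-up stack machine (in the spirit of P-code, but not actual P-code). It borrows Python's `ast` module as the parser for a tiny arithmetic language. All function names and the instruction set are hypothetical, and this is not how the Teal compiler is implemented.

```python
import ast

# Maps an operator node type to (Lua symbol, stack-machine mnemonic).
OPS = {ast.Add: ("+", "ADD"), ast.Sub: ("-", "SUB"), ast.Mult: ("*", "MUL")}


def parse(source: str) -> ast.expr:
    """Front end: turn source text into a syntax tree."""
    return ast.parse(source, mode="eval").body


def compile_to_lua(node: ast.expr) -> str:
    """Back end 1: the target language is Lua source code."""
    if isinstance(node, ast.Constant) and isinstance(node.value, int):
        return str(node.value)
    if isinstance(node, ast.Name):
        return node.id
    if isinstance(node, ast.BinOp) and type(node.op) in OPS:
        symbol, _ = OPS[type(node.op)]
        return f"({compile_to_lua(node.left)} {symbol} {compile_to_lua(node.right)})"
    raise NotImplementedError(type(node).__name__)


def compile_to_stack_code(node: ast.expr) -> list:
    """Back end 2: the target language is a made-up stack machine."""
    if isinstance(node, ast.Constant) and isinstance(node.value, int):
        return [f"PUSH {node.value}"]
    if isinstance(node, ast.Name):
        return [f"LOAD {node.id}"]
    if isinstance(node, ast.BinOp) and type(node.op) in OPS:
        _, mnemonic = OPS[type(node.op)]
        return (compile_to_stack_code(node.left)
                + compile_to_stack_code(node.right)
                + [mnemonic])
    raise NotImplementedError(type(node).__name__)


tree = parse("1 + 2 * x")
lua_source = "return " + compile_to_lua(tree)
stack_code = compile_to_stack_code(tree)
print(lua_source)   # return (1 + (2 * x))
print(stack_code)   # ['PUSH 1', 'PUSH 2', 'LOAD x', 'MUL', 'ADD']
```

Both back ends walk the same tree in the same way; only the last step differs, which is why it seems odd to reserve the word “compiler” for just one of them.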

As you can see, the distinction of “what is a transpiler” reduces to “what is source code” or “what is a high-level language”, the latter especially having a very fuzzy definition, so in the end my sociological observation on the uses of “transpiler” vs. “compiler” tends to boil down to people’s prejudices of “what makes it a Real, Hardcore Compiler”. But being a “transpiler” or not doesn’t say anything about the project’s “hardcoreness” either — I’m sure the TypeScript compiler which generates JavaScript is a lot more complex than a lot of compilers out there which generate machine code.

🔗 Again on 0-based vs. 1-based indexing

André Garzia made a nice blog post called “Lua, a misunderstood language” recently, and unfortunately (but perhaps unsurprisingly) a bulk of HN comments on it was about the age-old 0-based vs. 1-based indexing debate. You see, Lua uses 1-based indexing, and lots of programmers claimed this is unnatural because “every other language out there” uses 0-based indexing.

I’ll brush aside quickly the fact that this is not true — 1-based indexing has a long history, all the way from Fortran, COBOL, Pascal, Ada, Smalltalk, etc. — and I’ll grant that the vast majority of popular languages in the industry nowadays are 0-based. So, let’s avoid the popularity contest and address the claim that 0-based indexing is “inherently better”, or worse, “more natural”.

It really shows how conditioned an entire community can be when they find the statement “given a list x, the first item in x is x[1], the second item in x is x[2]” to be unnatural. :) And in fact this is a somewhat scary thought about groupthink outside of programming even!

I guess I shouldn’t be surprised by groupthink coming from HN, but it was also alarming how a bunch of the HN comments gave nearly identical responses, all linking to the same writing by Dijkstra defending 0-based indexing as inherently better, as an implicit Appeal to Authority. (Well, I did read Dijkstra’s note years ago and wasn’t particularly convinced by it — not the first time I disagree with Dijkstra, by the way — but if we’re giving it extra weight for coming from one of our field’s legends, then the list of 1-based languages above gives me a much longer list of legends who disagree — not to mention standard mathematical notation which is rooted on a much greater history.)

I think that a better approach, instead of trying to defend 1-based indexing, is to try to answer the question “why is 0-based indexing even a thing in programming languages?” Of course, nowadays the number one reason is tradition and familiarity given other popular languages, and I think even proponents of 0-based indexing would agree, in spite of the fact that most of them wouldn’t even notice that they don’t call it a number zero reason. But if the main reason for something is tradition, then it’s important to know how the tradition started. It wasn’t with Dijkstra.

C is pointed to as the popularizer of this style. C’s well-known history points to BCPL by Martin Richards as its predecessor, a language designed to be simple to write a compiler for. One of the simplifications carried over to C: array indexing and pointer offsets were mashed together.

It’s telling how, whenever people go into non-Appeal-to-Authority arguments to defend 0-based indexes (including Dijkstra himself), they start talking about offsets. That’s because offsets are naturally 0-based, being a relative measurement: here + 0 = here; here + 1 meter = 1 meter away from here, and so on. Numeric indexes, in contrast, are identifiers for elements of an ordered object, and thus use the 1-based ordinal numbers: the first card in the deck, the second in the deck, etc.

BCPL, back in 1967, took a shortcut and made it so that `p[i]` (an index) was equal to `p + i` (an offset). C inherited that. And nowadays, all arguments that say that indexes should be 0-based are actually arguments that offsets are 0-based, indexes are offsets, therefore indexes should be 0-based. That’s a circular argument. Even Dijkstra’s argument starts with the calculation of differences, i.e., doing “pointer arithmetic” (offsets), not indexing.
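The distinction between the two notions can be sketched with a flat list standing in for memory. The names `memory`, `base`, `at_offset` and `at_index` are hypothetical; `at_offset` models the C rule that `p[i]` means `*(p + i)`, while `at_index` models an ordinal, 1-based lookup.

```python
memory = [0] * 16
base = 4  # hypothetical address where a 3-element array starts
memory[base:base + 3] = [10, 20, 30]


def at_offset(p: int, i: int) -> int:
    """Offset style: how far from the start? Zero means 'right here'."""
    return memory[p + i]


def at_index(p: int, n: int) -> int:
    """Ordinal style: the n-th element, counting from the first."""
    return memory[p + (n - 1)]


# The same element, named two ways: offset 0 from the start, or the 1st item.
print(at_offset(base, 0), at_index(base, 1))  # 10 10
print(at_offset(base, 2), at_index(base, 3))  # 30 30
```

The subtraction in `at_index` is the small piece of work that the BCPL shortcut avoided by treating the two notions as one.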

Nowadays, people just repeat these arguments over and over, because “C won”, and now that tiny compiler-writing shortcut from the 1960s appears in Java, C#, Python, Perl, PHP, JavaScript and so on, even though none of these languages even have pointer arithmetic.

What’s funny to think about is that if instead C had not done that and used 1-based indexing, people today would certainly be claiming how C is superior for providing both 1-based indexing with p[i] and 0-based pointer offsets with p + i. I can easily visualize how people would argue that was the best design because there are always scenarios where one leads to more natural expressions than the other (similar to having both x++ and ++x), and how newcomers getting them mixed up were clearly not suited for the subtleties of low-level programming in C, and should be instead using simpler languages with garbage collection and without 0-based pointer arithmetic.

🔗 What’s faster? Lexing Teal with Lua 5.4 or LuaJIT, by hand or with lpeg

I ran a small experiment where I ported my handwritten lexer written in Teal (which translates to Lua) to lpeg, then ran the compiler on the largest Teal source I have (itself).

| Configuration | Lexer time | Total time |
|---|---|---|
| LuaJIT+hw | 36 ms | 291 ms |
| LuaJIT+lpeg | 40 ms | 325 ms |
| Lua5.4+hw | 105 ms | 338 ms |
| Lua5.4+lpeg | 66 ms | 285 ms |

These are average times from multiple runs of tl check tl.tl, done with hyperfine.

The “lexer time” was done by adding an os.exit(0) call right after the lexer pass. The “total time” is the full run, which includes additional lexer passes on some tiny code snippets that are generated on the fly, so the change between the lpeg lexer and the handwritten lexer affects it a bit as well.

Looks like on LuaJIT my handwritten lexer beats lpeg, but the rest of the compiler (lots of AST manipulation and recursive walks) seems to run faster on Lua 5.4.2 than it does on LuaJIT 2.1-git.

Then I decided to move the os.exit(0) further, to after the parser step but before the type checking and code generation steps. These are the results:

| Configuration | Lexer+parser time |
|---|---|
| LuaJIT+hw | 107 ms ± 20 ms |
| LuaJIT+lpeg | 107 ms ± 21 ms |
| Lua5.4+hw | 163 ms ± 3 ms |
| Lua5.4+lpeg | 120 ms ± 2 ms |

The Lua 5.4 numbers I got seem consistent with the lexer-only tests: the same 40 ms gain was observed when switching to lpeg, which tells me the parsing step is taking about 55 ms with the handwritten parser. With LuaJIT, the handwritten parser seems to take about 65 ms. What was interesting, though, was the variance reported by hyperfine: Lua 5.4 tests gave me a variation in the order of 2-3 ms, and LuaJIT was around 20 ms.

I re-ran the “total time” tests running the full tl check tl.tl to observe the variance, and it was again consistent, with LuaJIT’s performance jitter (no pun intended!) being 6-8x that of Lua 5.4:

| Configuration | Total time |
|---|---|
| LuaJIT+hw | 299 ms ± 25 ms |
| LuaJIT+lpeg | 333 ms ± 31 ms |
| Lua5.4+hw | 336 ms ± 4 ms |
| Lua5.4+lpeg | 285 ms ± 4 ms |

My conclusion from this experiment is that converting the Teal parser from the handwritten one into lpeg would probably increase performance in Lua 5.4 and decrease the performance variance in LuaJIT (with the bulk of that processing happening in the more performance-reliable lpeg VM — in fact, the variance numbers of the “lexer tests” in lpeg mode are consistent between LuaJIT and Lua 5.4). It’s unclear to me whether an lpeg-based parser will actually run faster or slower than the handwritten one under LuaJIT — possibly the gain or loss will be below the 8-9% margin of variance we observe in LuaJIT benchmarks, so it’s hard to pay much attention to any variability below that range.
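For reference, the “6-8x” jitter ratio and the “8-9%” margin can be recomputed from the total-time figures reported above. This is just the arithmetic spelled out; the variable names are mine.

```python
# (mean, deviation) in milliseconds, copied from the total-time results.
total_times_ms = {
    "LuaJIT+hw": (299, 25),
    "LuaJIT+lpeg": (333, 31),
    "Lua5.4+hw": (336, 4),
    "Lua5.4+lpeg": (285, 4),
}

# Deviation as a fraction of the mean run time.
relative = {name: dev / mean for name, (mean, dev) in total_times_ms.items()}
for name, ratio in relative.items():
    print(f"{name}: {ratio:.1%}")

# LuaJIT jitter compared to Lua 5.4 jitter, for each lexer variant.
jitter_ratio_hw = total_times_ms["LuaJIT+hw"][1] / total_times_ms["Lua5.4+hw"][1]
jitter_ratio_lpeg = total_times_ms["LuaJIT+lpeg"][1] / total_times_ms["Lua5.4+lpeg"][1]
print(jitter_ratio_hw, jitter_ratio_lpeg)  # 6.25 7.75
```

The LuaJIT runs come out at roughly 8.4% and 9.3% of their mean, while both Lua 5.4 runs stay under 1.5%.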

Right now, performance of the Teal compiler is not an immediate concern, but I wanted to get a feel of what’s within close reach. The Lua 5.4 + lpeg combo looks promising and the LuaJIT pure-Lua performance is great as usual. For now I’ll keep using the handwritten lexer and parser, if only to avoid a C-based dependency in the core compiler: it’s nice to be able to take the generated tl.lua from the GitHub repo, drop it into any Lua project, call tl.loader() to register the package loader and get instant Teal support in your require() calls!

Update: Turns out a much faster alternative is to use LuaJIT with the JIT disabled! Here are some rough numbers:

- JIT + lpeg: 327ms
- 5.4: 310ms
- JIT: 277ms
- 5.4 + lpeg: 274ms
- JIT w/ jit.off: 173ms
- JIT w/ jit.off + lpeg: 157ms

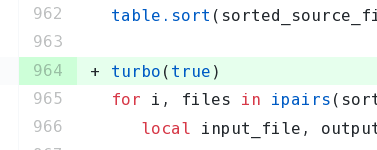

I have now implemented a function which disables the JIT on LuaJIT and temporarily disables garbage collection (which provides further speed ups) and merged it into Teal. The function was appropriately named:
