🔗 Compiler versus Transpiler: what is a compiler, anyway?

Teal was featured on HN today, and one of the comments was questioning the fact that the documentation states that it “compiles Teal into Lua”:

We need better and more rigorous terms in computing science. This use of the compiler word blurs the meaning of interpreted vs compiled languages.

I was under the assumption that it would generate executable machine code, not Lua source code.

I thought that was worth replying to because it allowed me to dispel two misconceptions at once.

First, if we want to be rigorous about computer science terms, calling it “interpreted vs compiled languages” is a misnomer, because being interpreted or compiled is not a property of the language, but of the implementation. There have been things such as a C interpreter and an ahead-of-time compiler for PHP which generates machine code.

But then, we get to the main course, the use of “compiler”.

The definition of compiler has never assumed generating executable machine code. Already in the 1970s, Pascal compilers generated P-code (a form of “bytecode” in Java parlance), which was then interpreted. In the 1980s, Turbo Pascal produced machine code directly.

I’ve seen the neologism “transpiler” being very frowned upon by the academic programming language community precisely because a compiler is a compiler, no matter the output language — my use of “compiler” there was precisely because of my academic background.

I remember people on Academic PL Twitter jokingly calling it the “t-word” even. I just did a quick Twitter search to see if I could find it, and I found a bunch of references dating from 2014 (though I won’t go linking people’s tweets here). But that shows how out-of-date this blog post is! Academia has pretty much settled on not using the “compiler” vs. “transpiler” distinction at all by now.

I don’t mind the term “transpiler” myself if it helps non-academics understand it’s a source-to-source compiler, but then, you don’t see people calling the Nim compiler, which generates C code then compiles it into machine code, a “transpiler”, even though it is a source-to-source compiler.

In the end, “compiler” is the all-encompassing term for a program that takes code in one language and produces code in another, be it high-level or machine language. And yes, that means that pedantically an assembler is a compiler as well (but we don’t want to be pedantic, right? RIGHT?). And since we’re talking assembler, most C compilers do not generate executable machine code either: gcc produces assembly, which is then turned into machine code by gas. So gcc is a source-to-source compiler? Is Turbo Pascal more of a compiler than gcc? I could just as well add an output step in the Teal compiler to produce an executable in the output using the same techniques of the Pascal compilers of the 70s. I don’t think that would make it more or less of a compiler.
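To make the point concrete, here is a minimal sketch of a toy compiler with one front end and two back ends: one emits source text in another notation, the other emits instructions for a made-up stack machine (in the spirit of P-code). Everything here is hypothetical and unrelated to Teal's actual implementation: the toy language is just integer arithmetic, parsed with Python's own `ast` module, and the names `to_source`, `to_stack_code` and `run` are invented for illustration.

```python
import ast

# Operators supported by the toy language.
OPS = {ast.Add: "+", ast.Sub: "-", ast.Mult: "*"}

def to_source(node):
    """Back end 1: emit fully parenthesized infix source text."""
    if isinstance(node, ast.Expression):
        return to_source(node.body)
    if isinstance(node, ast.Constant):
        return str(node.value)
    if isinstance(node, ast.Name):
        return node.id
    if isinstance(node, ast.BinOp):
        op = OPS[type(node.op)]
        return f"({to_source(node.left)} {op} {to_source(node.right)})"
    raise ValueError(f"unsupported node: {type(node).__name__}")

def to_stack_code(node):
    """Back end 2: emit instructions for a made-up stack machine."""
    if isinstance(node, ast.Expression):
        return to_stack_code(node.body)
    if isinstance(node, ast.Constant):
        return [("PUSH", node.value)]
    if isinstance(node, ast.Name):
        return [("LOAD", node.id)]
    if isinstance(node, ast.BinOp):
        return (to_stack_code(node.left) + to_stack_code(node.right)
                + [("OP", OPS[type(node.op)])])
    raise ValueError(f"unsupported node: {type(node).__name__}")

def run(code, env):
    """Tiny interpreter for the stack code, standing in for a bytecode VM."""
    stack = []
    for instr, arg in code:
        if instr == "PUSH":
            stack.append(arg)
        elif instr == "LOAD":
            stack.append(env[arg])
        else:  # "OP": pop two operands, push the result
            b, a = stack.pop(), stack.pop()
            stack.append({"+": a + b, "-": a - b, "*": a * b}[arg])
    return stack.pop()

tree = ast.parse("1 + x * 2", mode="eval")
print(to_source(tree))                       # source-to-source output
print(to_stack_code(tree))                   # bytecode-style output
print(run(to_stack_code(tree), {"x": 5}))    # interpreting the bytecode
```

Both back ends walk the same tree and differ only in the language they emit, which is the sense in which neither is "more of a compiler" than the other.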

As you can see, the distinction of “what is a transpiler” reduces to “what is source code” or “what is a high-level language”, the latter especially having a very fuzzy definition, so in the end my sociological observation on the uses of “transpiler” vs. “compiler” tends to boil down to people’s prejudices of “what makes it a Real, Hardcore Compiler”. But being a “transpiler” or not doesn’t say anything about the project’s “hardcoreness” either — I’m sure the TypeScript compiler which generates JavaScript is a lot more complex than a lot of compilers out there which generate machine code.

🔗 Again on 0-based vs. 1-based indexing

André Garzia made a nice blog post called “Lua, a misunderstood language” recently, and unfortunately (but perhaps unsurprisingly) the bulk of the HN comments on it were about the age-old 0-based vs. 1-based indexing debate. You see, Lua uses 1-based indexing, and lots of programmers claimed this is unnatural because “every other language out there” uses 0-based indexing.

I’ll brush aside quickly the fact that this is not true — 1-based indexing has a long history, all the way from Fortran, COBOL, Pascal, Ada, Smalltalk, etc. — and I’ll grant that the vast majority of popular languages in the industry nowadays are 0-based. So, let’s avoid the popularity contest and address the claim that 0-based indexing is “inherently better”, or worse, “more natural”.

It really shows how conditioned an entire community can be when they find the statement “given a list x, the first item in x is x[1], the second item in x is x[2]” to be unnatural. :) And in fact this is a somewhat scary thought about groupthink outside of programming even!

I guess I shouldn’t be surprised by groupthink coming from HN, but it was also alarming how a bunch of the HN comments gave nearly identical responses, all linking to the same writing by Dijkstra defending 0-based indexing as inherently better, as an implicit Appeal to Authority. (Well, I did read Dijkstra’s note years ago and wasn’t particularly convinced by it, and it’s not the first time I disagree with Dijkstra, by the way. But if we’re giving it extra weight for coming from one of our field’s legends, then the list of 1-based languages above gives me a much longer list of legends who disagree, not to mention standard mathematical notation, which is rooted in a much longer history.)

I think that a better thought, instead of trying to defend 1-based indexing, is to try to answer the question “why is 0-based indexing even a thing in programming languages?” Of course, nowadays the number one reason is tradition and familiarity given other popular languages, and I think even proponents of 0-based indexing would agree, in spite of the fact that most of them wouldn’t even notice that they don’t call it a number zero reason. But if the main reason for something is tradition, then it’s important to know how the tradition started. It wasn’t with Dijkstra.

C is often pointed to as the popularizer of this style. C’s well-known history points to BCPL by Martin Richards as its predecessor, a language designed to be simple to write a compiler for. One of the simplifications carried over to C: array indexing and pointer offsets were mashed together.

It’s telling how, whenever people go into non-Appeal-to-Authority arguments to defend 0-based indexes (including Dijkstra himself), people start talking about offsets. That’s because offsets are naturally 0-based, being a relative measurement: here + 0 = here; here + 1 meter = 1 meter away from here, and so on. In the same way, numeric indexes are naturally 1-based: they identify elements of an ordered object, and thus use the ordinal numbers (the first card in the deck, the second in the deck, etc.).

BCPL, back in 1967, took a shortcut and made it so that p[i] (an index) referred to the element at p + i (an offset). C inherited that. And nowadays, all arguments that say that indexes should be 0-based are actually arguments that offsets are 0-based, indexes are offsets, therefore indexes should be 0-based. That’s a circular argument. Even Dijkstra’s argument starts with the calculation of differences, i.e., doing “pointer arithmetic” (offsets), not indexing.
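The difference between the two notions can be sketched in a few lines. The `OneBased` wrapper and `at_offset` helper below are hypothetical names made up for this illustration: the first exposes ordinal indexes ("the first card"), the second exposes offsets ("zero steps from the start"), and the only thing relating them is a fixed shift of one.

```python
class OneBased:
    """Hypothetical wrapper: ordinal (1-based) indexes over offset-based storage."""

    def __init__(self, items):
        self._items = list(items)

    def __len__(self):
        return len(self._items)

    def __getitem__(self, index):
        # Ordinals run from 1 (first) to len (last); there is no "zeroth" element.
        if not 1 <= index <= len(self._items):
            raise IndexError(f"index {index} out of range 1..{len(self._items)}")
        return self._items[index - 1]  # ordinal index -> offset from the start


def at_offset(items, offset):
    """Offset-style access: how far to move from the first element."""
    return items[offset]


cards = ["ace", "king", "queen"]
deck = OneBased(cards)

# The first card is zero steps away from the start of the deck.
assert deck[1] == at_offset(cards, 0)
# The last card is the len-th card, and len - 1 steps away from the start.
assert deck[len(deck)] == at_offset(cards, len(cards) - 1)
```

Neither view is wrong; they answer different questions ("which one?" versus "how far?"), and conflating them is a design choice rather than a necessity.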

Nowadays, people just repeat these arguments over and over, because “C won”, and now that tiny compiler-writing shortcut from the 1960s appears in Java, C#, Python, Perl, PHP, JavaScript and so on, even though none of these languages have pointer arithmetic.

What’s funny to think about is that if instead C had not done that and used 1-based indexing, people today would certainly be claiming how C is superior for providing both 1-based indexing with p[i] and 0-based pointer offsets with p + i. I can easily visualize how people would argue that was the best design because there are always scenarios where one leads to more natural expressions than the other (similar to having both x++ and ++x), and how newcomers getting them mixed up were clearly not suited for the subtleties of low-level programming in C, and should be instead using simpler languages with garbage collection and without 0-based pointer arithmetic.

🔗 What’s faster? Lexing Teal with Lua 5.4 or LuaJIT, by hand or with lpeg

I ran a small experiment where I ported my handwritten lexer written in Teal (which translates to Lua) to lpeg, then ran the compiler on the largest Teal source I have (itself).

| Configuration | Lexer time | Total time |
| --- | --- | --- |
| LuaJIT+hw | 36 ms | 291 ms |
| LuaJIT+lpeg | 40 ms | 325 ms |
| Lua5.4+hw | 105 ms | 338 ms |
| Lua5.4+lpeg | 66 ms | 285 ms |

These are average times from multiple runs of tl check tl.tl, done with hyperfine.

The “lexer time” was done by adding an os.exit(0) call right after the lexer pass. The “total time” is the full run, which includes additional lexer passes on some tiny code snippets that are generated on the fly, so the change between the lpeg lexer and the handwritten lexer affects it a bit as well.

Looks like on LuaJIT my handwritten lexer beats lpeg, but the rest of the compiler (lots of AST manipulation and recursive walks) seems to run faster on Lua 5.4.2 than it does on LuaJIT 2.1-git.

Then I decided to move the os.exit(0) further, to after the parser step but before the type checking and code generation steps. These are the results:

| Configuration | Lexer+parser time |
| --- | --- |
| LuaJIT+hw | 107 ms ± 20 ms |
| LuaJIT+lpeg | 107 ms ± 21 ms |
| Lua5.4+hw | 163 ms ± 3 ms |
| Lua5.4+lpeg | 120 ms ± 2 ms |

The Lua 5.4 numbers I got seem consistent with the lexer-only tests: the same 40 ms gain was observed when switching to lpeg, which tells me the parsing step is taking about 55 ms with the handwritten parser. With LuaJIT, the handwritten parser seems to take about 65 ms. What was interesting, though, was the variance reported by hyperfine: Lua 5.4 tests gave me a variation in the order of 2-3 ms, and LuaJIT was around 20 ms.
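The subtraction behind those estimates can be spelled out as follows. This is only a back-of-the-envelope sketch using the averages reported above; since it takes differences of means from separate runs, the results are rough, particularly for LuaJIT, where the run-to-run variation is around 20 ms.

```python
# Average timings in ms, copied from the measurements reported above.
lexer_only = {"LuaJIT+hw": 36, "LuaJIT+lpeg": 40, "Lua5.4+hw": 105, "Lua5.4+lpeg": 66}
lexer_and_parser = {"LuaJIT+hw": 107, "LuaJIT+lpeg": 107, "Lua5.4+hw": 163, "Lua5.4+lpeg": 120}

# Estimated parser time = (lexer + parser) - (lexer only).
parser_estimate = {name: lexer_and_parser[name] - lexer_only[name] for name in lexer_only}

# Gain from switching the lexer to lpeg on Lua 5.4, seen in both experiments.
lpeg_gain_lexer_only = lexer_only["Lua5.4+hw"] - lexer_only["Lua5.4+lpeg"]
lpeg_gain_with_parser = lexer_and_parser["Lua5.4+hw"] - lexer_and_parser["Lua5.4+lpeg"]

for name, ms in parser_estimate.items():
    print(f"{name}: parser takes roughly {ms} ms")
print(f"Lua 5.4 lpeg gain: {lpeg_gain_lexer_only} ms (lexer only), "
      f"{lpeg_gain_with_parser} ms (lexer+parser)")
```

The Lua 5.4 estimates come out at 54 to 58 ms and the LuaJIT ones at 67 to 71 ms, in the same ballpark as the figures quoted above once the measurement noise is taken into account.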

I re-ran the “total time” tests running the full tl check tl.tl to observe the variance, and it was again consistent, with LuaJIT’s performance jitter (no pun intended!) being 6-8x that of Lua 5.4:

| Configuration | Total time |
| --- | --- |
| LuaJIT+hw | 299 ms ± 25 ms |
| LuaJIT+lpeg | 333 ms ± 31 ms |
| Lua5.4+hw | 336 ms ± 4 ms |
| Lua5.4+lpeg | 285 ms ± 4 ms |

My conclusion from this experiment is that converting the Teal parser from the handwritten one into lpeg would probably increase performance in Lua 5.4 and decrease the performance variance in LuaJIT (with the bulk of that processing happening in the more performance-reliable lpeg VM — in fact, the variance numbers of the “lexer tests” in lpeg mode are consistent between LuaJIT and Lua 5.4). It’s unclear to me whether an lpeg-based parser will actually run faster or slower than the handwritten one under LuaJIT — possibly the gain or loss will be below the 8-9% margin of variance we observe in LuaJIT benchmarks, so it’s hard to pay much attention to any variability below that range.
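For reference, the "6-8x" jitter ratio and the "8-9%" margin can be recomputed from the total-time measurements. The snippet below is just that arithmetic; the helper name `relative_spread` is made up for this sketch.

```python
# (mean, spread) in ms for the full `tl check tl.tl` runs reported above.
results = {
    "LuaJIT+hw": (299, 25),
    "LuaJIT+lpeg": (333, 31),
    "Lua5.4+hw": (336, 4),
    "Lua5.4+lpeg": (285, 4),
}

def relative_spread(mean, spread):
    """Spread as a percentage of the mean."""
    return 100 * spread / mean

for name, (mean, spread) in results.items():
    print(f"{name}: ±{relative_spread(mean, spread):.1f}%")

# How much larger LuaJIT's spread is than Lua 5.4's, for each lexer variant.
jitter_ratio_hw = results["LuaJIT+hw"][1] / results["Lua5.4+hw"][1]
jitter_ratio_lpeg = results["LuaJIT+lpeg"][1] / results["Lua5.4+lpeg"][1]
print(jitter_ratio_hw, jitter_ratio_lpeg)
```

LuaJIT's relative spread works out to roughly 8.4% and 9.3%, against 1.2% and 1.4% for Lua 5.4, and the spread ratios are 6.25 and 7.75.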

Right now, performance of the Teal compiler is not an immediate concern, but I wanted to get a feel for what’s within close reach. The Lua 5.4 + lpeg combo looks promising and the LuaJIT pure-Lua performance is great as usual. For now I’ll keep using the handwritten lexer and parser, if only to avoid a C-based dependency in the core compiler: it’s nice to be able to take the generated tl.lua from the GitHub repo, drop it into any Lua project, call tl.loader() to register the package loader and get instant Teal support in your require() calls!

Update: Turns out a much faster alternative is to use LuaJIT with the JIT disabled! Here are some rough numbers:

| Configuration | Time |
| --- | --- |
| JIT + lpeg | 327 ms |
| 5.4 | 310 ms |
| JIT | 277 ms |
| 5.4 + lpeg | 274 ms |
| JIT w/ jit.off | 173 ms |
| JIT w/ jit.off + lpeg | 157 ms |

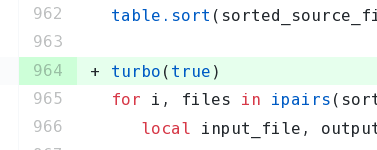

I have now implemented a function which disables the JIT on LuaJIT and temporarily disables garbage collection (which provides further speed ups) and merged it into Teal. The function was appropriately named:

🔗 Parsing and preserving Teal comments

The Teal compiler currently discards comments when parsing, and it constructs an abstract syntax tree (AST) which it then traverses to generate output Lua code. This is fine for running the code, but it would be useful to have the comments around for other purposes. Two things come to mind:

- JavaDoc/Doxygen/LDoc/(TealDoc?) style documentation generated from comments

- a code formatter that preserves comments

Today I spent some time playing with the lexer and parser and AST, looking into preserving comments from the input, with these two goals in mind.

The compiler has separate lexer and parser steps, both handwritten, and the comments were discarded at the lexer stage. That bit was easy. As I consumed each comment, I stored it as a metadata item on the following token (what if there’s no following token? I’m currently dropping the final comment if it’s the last thing in the file, but that would be trivial to fix).
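The idea of hanging comments on the following token can be sketched as below. This is not Teal's lexer: it is a simplified illustration over a tiny Lua-like subset, with hypothetical names (`Token`, `lex`), no support for long-bracket levels such as `--[==[`, and one assumed way of handling trailing comments (an end-of-file token that carries them).

```python
import re
from dataclasses import dataclass, field

# Simplified token grammar for illustration only.
TOKEN_RE = re.compile(r"""
    (?P<comment>--\[\[.*?\]\]|--[^\n]*)   # block comment or line comment
  | (?P<word>[A-Za-z_]\w*)                # keywords and identifiers
  | (?P<number>\d+)
  | (?P<op>[<>=()+\-*/])
  | (?P<space>\s+)
""", re.VERBOSE | re.DOTALL)

@dataclass
class Token:
    kind: str
    text: str
    comments: list = field(default_factory=list)  # comments seen just before this token

def lex(source):
    tokens, pending = [], []
    pos = 0
    while pos < len(source):
        m = TOKEN_RE.match(source, pos)
        if m is None:
            raise SyntaxError(f"unexpected character at offset {pos}")
        pos = m.end()
        kind = m.lastgroup
        if kind == "space":
            continue
        if kind == "comment":
            pending.append(m.group())  # hold it until the next real token shows up
            continue
        tokens.append(Token(kind, m.group(), pending))
        pending = []
    # Assumption: trailing comments ride on an end-of-file token instead of being dropped.
    tokens.append(Token("eof", "", pending))
    return tokens

tokens = lex("--[[ a ]] if --[[ b ]] x < 2 then -- c\nend --[[ d ]]")
for tok in tokens:
    print(tok.kind, repr(tok.text), tok.comments)
```

With this scheme every comment survives lexing, and the parser can decide later which of them it cares about.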

The hard part of dealing with comments is what to do with them when parsing: the parser stage reads tokens and builds the AST. The question then becomes which nodes to attach each comment to as metadata. This is way trickier than it looks, because comments can appear everywhere:

```lua
--[[ comment! ]] if --[[ comment! ]] x --[[ comment! ]] < --[[ comment! ]] 2 --[[ comment! ]] then --[[ comment! ]]
   --[[ comment! ]]
   --[[ comment! ]] print --[[ comment! ]] ( --[[ comment! ]] x --[[ comment! ]] ) --[[ comment! ]]
   --[[ comment! ]]
--[[ comment! ]] end --[[ comment! ]]
```

After playing a bit with a bunch of heuristics to determine which AST nodes I should attach comments to, in an attempt to preserve them all, I came to the conclusion that this is not the way to go.

The point of the abstract syntax tree is to abstract away the syntax, meaning that all those purely syntactical items such as then and end are discarded. So storing information such as “there’s a --[[ comment! ]] right before then and another one right after, and then another in the following line (no, in fact two of them!) before the next statement” is pretty much antithetical to the concept of an AST.

However, there are comments for which there is an easy match as to which AST node they should attach. And fortunately, those are sufficient for the “TealDoc” documentation generation: it’s easy to catch the comment that appears immediately before a statement node and attach to it. This way, we get pretty much the whole relevant ground covered: type definitions, function declarations, variable declarations. Then, to build a “TealDoc” tool, it would be a matter of traversing the AST and then writing a small separate parser to read the contents of the documentation comments (e.g. reading `@param` tags and the like). I chose to attach comments to statements and fields in data structures, and to stop there. Especially for a handwritten parser, adding comment handling code can add to the noise quickly.
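The "small separate parser" for documentation comments could look something like the sketch below. The tag syntax here is an assumption borrowed loosely from LDoc-style comments, since no TealDoc format is defined, and `parse_doc_comment` is a hypothetical helper name.

```python
import re

def parse_doc_comment(text):
    """Split a doc comment into a free-form description and (tag, content) pairs."""
    description, tags = [], []
    for raw in text.splitlines():
        # Drop the leading comment dashes and surrounding whitespace.
        line = raw.strip().lstrip("-").strip()
        m = re.match(r"@(\w+)\s*(.*)", line)
        if m:
            tags.append((m.group(1), m.group(2)))
        elif line:
            description.append(line)
    return " ".join(description), tags

# Hypothetical comment, as it might be attached to a function declaration node.
doc = """--- Adds two numbers.
-- @param a number: the first operand
-- @param b number: the second operand
-- @return number: the sum"""

summary, tags = parse_doc_comment(doc)
print(summary)
for tag, content in tags:
    print(tag, "->", content)
```

A documentation tool would then walk the AST, pick up the comment attached to each statement node, and run it through a function of this kind.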

As for the second use-case, a formatter/beautifier, I don’t think that going through the route of the AST is the right way to go. A formatter tool could share the compiler’s lexer, which does read and collect all tokens and comments, but then it should operate purely in the syntactic domain, without abstracting anything. I wrote a basic “token dumper” in the early days of Teal that did some of this work, but it hasn’t been maintained — as the language got more complex, it now needs some more context in order to do its work properly, so I’m guessing it needs a state machine and/or more lookahead. But with the comments preserved by the lexer, it should be possible to extend it into a proper formatter.

The code is in the preserve-comments branch. With these two additions (comments fully stored by the lexer, and comments partially attached to the AST by the parser), I think we have everything we need to serve as a good base for future documentation and formatter tooling. Any takers on these projects?

🔗 User power, not power users: htop and its design philosophy

What are the principles that underlie the software you make?

This short story is not really about htop, or about the feature request that I’ll use as an illustration, but about the core principles that drive the development of a bit of software, down to every last detail. And by “core principles” I really mean it.

When we develop software, we have to make a million decisions. We’re often driven by some unspoken general principles, ranging from our personal aesthetics on the external visuals, to our sense of what makes a good UX in the product behavior, to things such as “where does bloat cross the line” in the engineering internals. In FOSS, we’re often wearing all these hats at the same time.

We don’t always have it clear in our mind what drives those principles, we often “just know”. There’s no better opportunity to assess those principles than when user feedback asks for a change in the behavior. And there’s no better way to explain to yourself why the change “feels wrong” than to put those principles in writing.

Today was one such opportunity.

I was peeking at the htop issue tracker as an end-user, which is a refreshing experience, having recently retired from this FOSS project I started. I spotted a feature request, asking for a change to make it hide threads by default.

The rationale was sensible:

People casually using htop usually have no idea what userland threads are for.

People who actually need to see them can easily enable them via SHIFT+H. With them currently enabled by default, it is very inconvenient to go through the list and see what is running, taking up RAM, CPU usage and whatnot, therefore I think it’d be more user-friendly to not show them by default.

He proceeded to show two screenshots: one with the default behavior, full of threads (in green) mixed with the processes, and another with threads disabled.

When one of the current developers said that it’s easier for the user to figure out how to hide things than for them to discover that something hidden can be shown, the counter-argument was also sensible:

Htop can also show Disk IO, which can be arguably very useful, but is hidden by default.

At that point, I decided to put my “original author” hat on to explain the intent behind the existing behavior. Here’s what I wrote:

Hi @C0rn3j, I thought I’d drop by and give a bit of historical background as to what was my motivation for showing threads by default.

People casually using htop usually have no idea what userland threads are for.

Yes! I fully sympathize with this sentiment. And the choice for enabling threads and painting them green was deliberate.

You have no idea how many times I was asked “hey, why are some processes green?” over these 15+ years — and no, it wasn’t annoying: each of these times it was an opportunity to teach someone about threads!

htop was designed to provide a view to what’s going on in the system. If a process is spawning threads like crazy, or if all your cores are overwhelmed because multiple threads of a process are doing work, I think it’s fair to show it to the user, even if they don’t know a thing about threads — or I would say, especially if they don’t know a thing about threads, otherwise these things would be happening and they wouldn’t even know where to look.

Htop can also show Disk IO, which can be arguably very useful, but is hidden by default.

One of my last projects when I was still active in htop development was to add tabs to the interface, so that the user would have a more discoverable path for navigating through these additional columns:

This code is in the next branch of the old repo https://github.com/hishamhm/htop/ — I think the code for tabs is actually finished (though not super tested); it’s pretty nice, you can click on them or cycle with the Tab key, but the Perf Counters support which I was working on, never really got stable to a point of my liking, and that’s when I got busy with other things and drifted away from development — turns out I enjoy writing interface code a lot more than the systems monitoring part!

Anyway, I think that also illustrates the pattern that went into the design: I implemented the entire tabs feature because I wanted to make IO and Perf Counters more discoverable. I wanted to put that information in front of users’ faces, especially for users who don’t know about these things! I considered the fact that I had implemented the entire functionality of iotop inside htop but people didn’t know about it to be a personal failure, and a practical learning experience: adding systems functionality is useless if the UI design doesn’t get it to users’ hands. (And even I didn’t use the IO features because there was no convenient way of using them.)

I never wanted to implement a tool for “super advanced Linux power users who know what they’re doing”, otherwise I would have never spent a full line at the bottom showing F-keys, and I would have spent my time implementing Vim bindings (ugh ;) ) instead of mouse support. I’ve always made a point that every setting can be settable via the Setup screen, which you can access via F2-Setup (shown at the bottom line!) and which you can control with the keyboard or mouse, no “edit this config file to use this advanced feature for the initiated only” or even “read the man page” (in fact it only has a man page because Debian developers contributed it!).

I wanted to make a tool that has the power but doesn’t hide it from users, and instead invites users into becoming more powerful. A tool that reaches out its hand and guides them along the way, helping users to educate themselves about what’s happening in the internals of their system, and in this way have more control of it. This is how you hand power to users, not by erecting barriers of initiation or by giving them the bare minimum they need. I reject this dichotomy between “complicated tools for power users” and “stripped-down tools for mere mortals”, the latter being a design paradigm made popular by certain companies and unfortunately copied by so many OSS GUI projects, without realizing what the goals of that paradigm really were, but that’s another rant.

And that’s why threads are enabled by default, and colored in green!

(PS: and to put the point to the proof, I must say that the tabs feature was a much bigger code change than Perf Counters themselves; it included some quite big internal refactors (there’s no “toolkit”, everything is drawn “by hand” by htop) which unfortunately might make it difficult to resurrect that code (or not! who knows?), and of course tabs are user-definable and user-editable!)

The user who proposed the change in the defaults thanked me for the history tidbits and closed the feature request. But in some sense writing this down was probably more enlightening to me than it was for them.

When I started writing htop, I did not set out to create “an instrument for user empowerment” or any such lofty goal. (All I wanted was to have a top that scrolled and was more forgiving with mistypes than circa-2005 top!) But as I proceeded to write the software, every small decision had to come from somewhere, even if done without much deliberate thought, even if at the time it was just “it felt right”. That’s how your principles start to percolate into the project.

Over time, the picture I described in that reply above became clear to me. It helped me build practical guidelines, such as “every setting must be UI-accessible”, it helped me prioritize or even reject feature requests based on how much they aligned to those principles, and it helped me build a sense of purpose for the software I was working on.

If you don’t have it clear to yourself what the foundational principles of the software you’re building are, I recommend giving this exercise a try: try to explain why the things in the software are the way they are. There are always principles, even if they are not conscious to you at the moment.

(PS: It would be awesome if some enterprising soul would dig up the tab support code and resurrect it for htop 3! I don’t plan to do so myself any time soon, but all the necessary bits and pieces are there!)
