🔗 Smart tech — smart for whom?

Earlier today I quipped on social media: “We need dumb tech and smart users, and not the other way around”.

Later, I clarified that I’m not calling users of “smart” devices dumb. People are smart. The tech should try not to “dumb them down” by acting condescendingly, cutting down on their agency and limiting their opportunities for education.

A few people shared replies to the effect that they wish for smart devices that wouldn’t be at odds with the intents of the user. After all, we all want the convenience of tech, so why settle for “dumb tech”, right?

The question here becomes a game of words: what is a “smart device”, after all?

A technically-minded person would look at smart devices like smartphones, smart TVs, etc., and say “well, they are really computers”, or “they have computers inside”. But if we want to be technically pedantic, what is a computer? Having a Turing-complete microprocessor running programs? My old trusty microwave for sure has a microprocessor, but it’s definitely not a “smart device”. So it’s not about the internals; it definitely has to do with the end-user perception of the device.

The next reasonable approximation towards a definition is that a smart device allows you to install “apps”. You can extend it with more functionality (which is really making use of the fact that it’s a “computer inside”). Smart TVs and smartphones check this box. However, other home appliances like “smart kettles” don’t: their “smartness” comes from being internet-connected. So, generally, it looks like smart devices are things you either run apps on, or control via apps (from another smart device, of course).

So, allowing for running apps pretty much makes something into a computer, right? After all, a computer is a machine for running software. But it’s really interesting to think what is in fact a computer — where do we draw the line. From an end-user perspective, a game console is also a machine for running software — a particular kind of software, games — but it is not a computer. Is a Smart TV a computer? You can install apps in it. But they are also generally restricted to a certain kind of software: streaming services, video and the like.

Something doesn’t feel like a computer unless you can run any kind of software on it. This universality is a key concept. It’s interesting how we’re slowly driven back to the most fundamental definition of a computer: Alan Turing’s definition of a computer as a universal machine. Back in 1936, before the first actual computer was built during World War II, Turing wrote a philosophy section within a mathematics paper where he worked through a thought exercise on what it means to compute. In his example he used the idea of a person doing the computations by hand: reading data, applying rules to process data, producing new data, repeat. Everything that computes follows this model: game consoles, the autopilot in an airplane, PCs, the microcontroller in my microwave. And though Turing had a technical notion of universality in mind, the key point for us here is what, in our end-user understanding, makes us call some things computers and others not: the program (or set of allowed programs) is not fixed. This is what we see (and Turing saw) as universal: any program that may be expressed in the computer’s language can be written and run on it, not just a finite set.
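To make the “read data, apply rules, produce new data, repeat” loop concrete, here is a minimal sketch in Python. It is only an illustration and not a universal machine in Turing’s formal sense. All the names in it (`run`, `INVERT`, `INCREMENT`, `fixed_device`) are made up for this example. The point it tries to show is that the machinery is the same in both cases; what differs is who gets to supply the rules.

```python
def run(rules, tape, state="start", max_steps=1000):
    """Read a symbol, apply a rule, write a symbol, move, repeat."""
    cells = dict(enumerate(tape))  # position -> symbol
    pos = 0
    for _ in range(max_steps):
        if state == "halt":
            break
        symbol = cells.get(pos, "_")  # "_" stands for a blank cell
        write, move, state = rules[(state, symbol)]
        cells[pos] = write
        pos += 1 if move == "R" else -1
    return "".join(cells[i] for i in sorted(cells)).strip("_")


# Two hypothetical "programs", expressed purely as data:
# (state, symbol read) -> (symbol to write, direction, next state)
INVERT = {
    ("start", "0"): ("1", "R", "start"),
    ("start", "1"): ("0", "R", "start"),
    ("start", "_"): ("_", "R", "halt"),
}
INCREMENT = {  # unary increment: skip over the 1s, append one more
    ("start", "1"): ("1", "R", "start"),
    ("start", "_"): ("1", "R", "halt"),
}


def fixed_device(tape):
    """Same machinery inside, but the rules are not the owner's to change."""
    return run(INVERT, tape)


print(run(INVERT, "1011"))    # 0100
print(run(INCREMENT, "111"))  # 1111
print(fixed_device("1011"))   # 0100
```

Whoever can call `run` with rules of their own choosing has a computer. Whoever can only call `fixed_device` has an appliance, even though the same loop is running underneath.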

Are smart devices universal machines, then, in either sense of the word? Well, you can install new apps on them. Then they can do new things they couldn’t do yesterday. Does that make them computers? What about game consoles? If I buy a new game (which is effectively new software!), the console can also do new things, but you won’t really look at it as a computer. The reason is that you’re restricted in the kind of new software you can make this machine run: it only takes games, so it’s not universal, at least from an end-user point of view.

But game consoles are getting “smarter” nowadays! They not only play games; maybe a console will also have an app for showing you the weather, maybe it will accept some streaming service… but not others. And here we’re hinting at a key point of what “smart” devices are really like. They are, in fact, on the inside, universal machines that satisfy Turing’s definition. But they are not universal machines for you, the owner. For me, my Nintendo Switch is just a game console. For Nintendo, it is a computer: they can install any kind of software on it in their next software update. They can decide that it can play games, and also access YouTube, but not Netflix. Or they could change their mind tomorrow. From Nintendo’s perspective, the Switch is a universal machine, but not from mine.

The same thing happens, for example, with an iPhone. For Apple, it is a computer: they can run anything on it, the possibilities are endless. From the user’s perspective, it is a phone, onto which you can install apps, and in fact choose from a zillion apps in the App Store. But the possibilities, vast as they may be, are not endless. And that vastness doesn’t help much. From a user perspective, it doesn’t matter how many things you can do with something; what matters is which things you want to do with it, which of those you can do and which ones you can’t. Apple still decrees what’s allowed and what isn’t in the App Store, and will also run their own software on the operating system, over which you have zero say. A Chromecast may also be a computer on the inside, with all the necessary networking and video capabilities, but Google has decided that it won’t let me easily play my movies with it, and so it can’t, just like that.

And such is the reality of smart devices. My Samsung TV is my TV, but it is Samsung’s computer. My house is filled, more and more, with computers over which I have no control. And they have control over what I can and what I can’t do with the devices I bought. From planned obsolescence, to collecting data on my habits and selling it, to complicating access to functionality that is there: the common thread in smart devices is that there is someone on the other side controlling the experience. And as we progress towards the “ever smarter”, with AI-based voice assistants being added to more devices, a significant part of that experience becomes the ways it “delights and surprises you”, or, in other words, your lack of control over it.

After all, if it wasn’t surprising, if it did just what you expected and nothing more, it wouldn’t be all that “smart”, right? If you take all the “smartness” away, what remains is a “dumb” device, an empty shell, waiting for you to tell it what to do. Press a button to do the thing, and the thing happens. Don’t press, it doesn’t do the thing. Install new functionality, the new functionality is installed. Schedule it to do the thing, it does when scheduled, like a boring old alarm clock. Use it today, it runs today. Put it away, pick it up to use it ten years from now, it runs ten years from now. No surprise upgrades. No surprises.

“But what about the security upgrades”, you ask? Well, maybe I just wanted to vacuum my living room. Can’t we design devices such that the “online” component isn’t an indispensable, always-on necessity? Of course we can. But then my devices wouldn’t be their computers anymore.

And why do they want our devices to be their computers? It’s not to run their software on them and free-ride on our electricity bill: all these companies have more computers of their own than we can imagine. It’s about controlling our experience. Once the user has control over which software runs, they make the choices. Once they don’t, the choice is made for them. Whenever behavior that used to be user-controlled becomes automatic in a “smart” (i.e., not explicitly user-dictated) way, a choice is taken away from you and driven by someone else. And they might hide choices behind “it was the algorithm”, which gives a semblance of impersonality and deniability, but putting the algorithm in place is a deliberate act.

Taking power away from the user is a deliberate act. Take social networks, for example. You choose who to follow to curate your timeline, but then they say “no, we want our algorithm to choose who to display in your timeline!”. Of course, this is always to “delight” you with a “better experience”; in the case of social networks, a more addictive one, in the name of user engagement. And with the lines between tech conglomerates and smart devices being more and more blurred, the effect is such that this control extends into our lives beyond the glass screens.

In the past, any kind of rant like this about the harmful aspects of any piece of tech could well be answered with a “just don’t use it, then!” In the world of smart devices, the problem is that this is becoming less of an option, as the fabric of our social and professional lives increasingly depends on these networks, and soon enough alternatives will not be available. We are still “delighted” by the process, but just like, 15 years in, a smartphone is now just a phone, soon enough a smart kettle will be just a kettle, a smart vacuum will be just a vacuum and we won’t be able to clean our houses unless Amazon says it’s alright to do so. We need to build an alternative future, because I don’t want to go back to using a broom.

🔗 Protests and the space launch

I am not going to talk directly about the US protests. Instead, I will briefly note the role of the State in them, both as cause—promoting institutional racism—and as a continuing instigator. But aren’t the protests for justice, which supposedly needs to come from the State? But the government is clearly not interested in justice. So we have on one side the people, on the other side the State. But it didn’t have to be this way by definition: it’s _this_ particular state that’s the problem. And this makes me think of Peter Thiel.

Peter Thiel has said, in no uncertain terms, that he does not believe in democracy and ultimately wants the destruction of any form of State. That’s what he went up on stage in the elections for. That’s what the alt-right stands for. This is not a crisis, this is an ongoing plan. Historians will look at this as a multi-decade process, with the early 1980s under Reagan and Thatcher as the first inflection point, and the last few years with the alt-right, Trump and Brexit as the second one.

The first stage, neoliberalism, was about crippling the state in order to declare it inefficient and privatize it. In the 90s in particular this was sped up in practice and played down in discourse, but early on this was the stated goal.

Now at the second stage, it is no longer about crippling government institutions. It is about crippling the concept of government itself: get the incompetent to power, so that the supposed flaws of the democratic model become evident. Then offer something else.

Of course, the trickery there is that invariably the incompetent were led to power in various places by apparently legal but effectively criminal means: mass propaganda done in breach of campaign funding laws, voter suppression, buying congress.

So now we’re at the stage where there’s a useful minority of radicalized fascists ensuring we get the worst possible government, and a mass of average people whose heroes are billionaires, ranging from your monopolist-turned-philanthropist to your tweeting-techbro bigot. It may seem contradictory that the forces that are ultimately destroying the notion of nation-state ostensibly employ the discourse of nationalism. But if you pay attention, they are priming their base to adapt to the upcoming state of things.

This pandemic is the first time in history when we see a national crisis being addressed by the government by giving a press release and giving names of _companies_ who are going to be doing this and that to deal with the issue. This was very, very startling to see.

The notion that it is up to companies and not the state to organize society is being normalized. America is at the forefront of this process, and has been for a long time. Other places still have things like functioning health and educational systems, but the pressure exists. In that sense, the landmark space launch (once a matter of national pride, now privatized into another triumph of business over country), led by Thiel’s associate Musk, is not at all disconnected from the ongoing events. They are deeply, closely connected.

Thiel’s company Palantir (named after the all-seeing eye in LOTR, another techbro favorite) provides machinery for mass surveillance in the US. The surveillance people worldwide are under is already managed by a private company.

When you think of Russia and its oligarchs, or the growing number of billionaires in China’s parliament (why lobby the middleman, just buy a Congress seat for yourself), we see that the increasingly direct control of superpowers by businessmen is not an American-only phenomenon. It might be that the façade of a state will stick around for a long time, as a useful device as people cling to their flags and anthems, but I feel the foundations of the modern nation-state slowly crumble into dust under my feet, and I do not like what’s taking its place.

Zygmunt Bauman saw it back in the 1990s: in a world of companies, we’re no longer citizens, only consumers. And the rippling effects of this are much worse than they initially sound, but this is a conversation for another time.

🔗 Remembering Windows 3.1 themes and user empowerment

This reminiscence started when I read a tweet that said:

Unpopular opinion: dark modes are overhyped

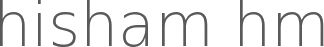

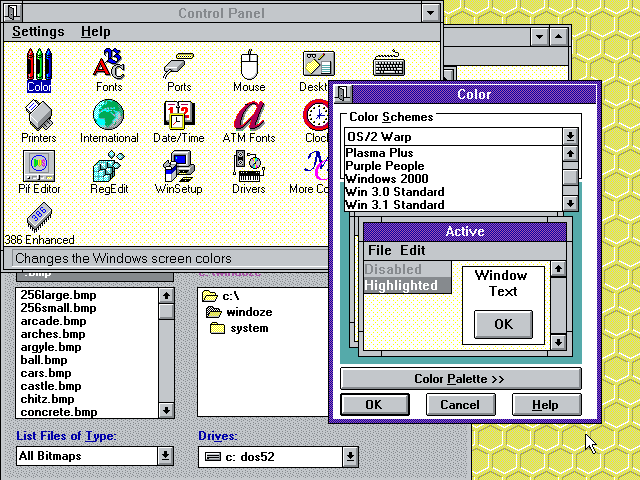

Windows 3.1 allowed you to change all system colors to your liking. Linux has been fully themeable since the 90s. OSX came along with a draconian “all blue aqua, and maybe a hint of gray”.

People accepted it because frankly it looked better than anything else at the time (a ton of Linux themes were bad OSX replicas). But it was a very “Ford Model T is available in any color as long as it’s black” thing.

The rise of OSX (remember, when it came along Apple had a single-digit slice of the computer market) meant that people eventually got used to the idea of a life with no desktop personalization. Nowadays most people don’t even change their wallpapers anymore.

In the old days of Windows 3.1, it was common to walk into an office and see each person’s desktop colors, fonts and wallpapers tuned to their personalities, just like their physical desk, with one’s family portrait or plants.


I just showed the above screenshots to my sister, and she sighed with a happy nostalgia:

— Remember changing colors on the computer?

— Oh yes! we would spend hours having fun on that!

— Everyone’s was different, right?

— Yes! I’d even change it according to my mood.

Looking back, I feel like this trend of less aesthetic configurability has diminished the sense of user ownership from the computer experience, part of the general trend of the death of “personal computing”.

I almost wrote that a phone UI allows for more self-expression today than a Win/Mac computer. But then I realized how much I struggled to get my Android UI the way I wanted, until I installed Nova Launcher, which gave me Linux levels of tweaking. The average user does not do this.

But at least they are more likely to change the wallpaper on their phones than on their computers. Nowadays you walk into an office and all computers look the same.

The same thing happened to the web, as we compare the diminishing tweakability of a MySpace page to the blue conformity of a Facebook page, for example.

Conformity and death of self-expression are the norm, all under the guise of “consistency”.

User avatars forced into circles.

App icons in phones forced into the same shape.

Years ago, a friend joked that the inconsistency of the various Linux UI toolkits was how he felt the system’s “freedom”. We all laughed and wished for a more consistent UI, of course. But that discourse on consistency was quickly coopted to remove users’ agency.

What begins with aesthetics and the sense of self-expression, continues to a lack of ownership of the computing experience and ends in the passive acceptance of systems we don’t control.

Changes happen, but those are independent from the users’ wishes, and it’s a lottery whether the changes are for better or for worse.

Ever notice how version changes are called “updates” and not “upgrades” anymore?

In that regard, I think Dark Mode is a welcome addition as it allows a tiny bit of control and self-expression to the user, but it’s still kinda sad to see how far we regressed overall.

The hype around it, and how excited users get when they get such crumbs of configurability handed to them, just goes to show how unused users are to getting any degree of control back in their hands.

🔗 On the word “latino”

One of my least-favorite American English words is “latino”, for two reasons:

First, a linguistic reason: because it’s not inflected when used. When you’re used to the fact that in Spanish and Portuguese “latino” refers only to men and “latina” only to women, hearing “latino woman” sounds really weird (weirder than, say, “handsome woman”). Even weirder is “latino women”, mixing a Spanish/Portuguese word and English grammar. “Bonito girls”? :)

Second, a sociological reason: because using a foreign loanword reinforces the otherness. Nobody calls the Italian community in America “italiano”, although that’s their name in Italian. The alternative “Hispanic” is not ideal because it doesn’t really make sense when including Brazil, which was never a Spanish colony (plus, the colonial past is something most countries want to leave behind).

I can’t change the language by myself, so I just avoid the term and use more specific ones whenever possible (Colombians, Argentines, Brazilians, South Americans, Latin Americans when referring to people from the area in general, etc.)

After writing the above, I checked Wikipedia and it seems the communities in the US agree with me:

« In a recent study, most Spanish-speakers of Spanish or Hispanic American descent do not prefer the term “Hispanic” or “Latino” when it comes to describing their identity. Instead, they prefer to be identified by their country of origin. When asked if they have a preference for either being identified as “Hispanic” or “Latino,” the Pew study finds that “half (51%) say they have no preference for either term.”[43] A majority (51%) say they most often identify themselves by their family’s country of origin, while 24% say they prefer a pan-ethnic label such as Hispanic or Latino. Among those 24% who have a preference for a pan-ethnic label, “‘Hispanic’ is preferred over ‘Latino’ by more than a two-to-one margin—33% versus 14%.” Twenty-one percent prefer to be referred to simply as “Americans.” »

I think the awkwardness in the grammar from point one actually reinforces point two, because it strikes me as something that no Spanish or Portuguese native speaker would come up with by themselves. So it sounds tacked on.

Don’t get me wrong, I fully identify as a Brazilian, a South American and a Latin American (travelling abroad helps a lot to widen your cultural identity!) and I have no problem when people wear the term “latino” proudly, but I always pay close attention to the power of language and how it represents and propagates ideas.
